Welcome to the community forum, @biomedswe

You’re correct: when the configuration is resolved, the pipeline hasn’t run yet, so it can’t resolve variables that are only defined during a task run (a process is merely a definition; a task is an instance of that process, and it is what actually runs). As @mahesh.binzerpanchal mentioned, the Nextflow `.command.*` files are meant to capture what you’re after.

One idea is to use the `afterScript` directive to copy or move your logs from the task directory to your logs folder (see the `afterScript` entry in the Nextflow process directives documentation).

I prepared a PoC below that should work for you.

My project folder contains a `main.nf` Nextflow script and, inside a `bin` folder, a `move_logs.sh` Bash script.

```
$ tree -a
.
├── bin
│   └── move_logs.sh
└── main.nf
```
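If you want to recreate this layout, the commands below set it up (you still need to paste in the file contents). Nextflow adds the project’s `bin` folder to the `PATH` of each task, which is presumably what lets the `afterScript` refer to `move_logs.sh` by name. Making the script executable is not required for `source`, but it is required if you ever call it directly, so it doesn't hurt:

```shell
#!/usr/bin/env bash
# Recreate the folder layout used in this example (file contents are added separately).
mkdir -p bin
touch main.nf bin/move_logs.sh
# Not needed for `source`, but needed if the script is ever run directly.
chmod +x bin/move_logs.sh
```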

Below is the content of my Nextflow script:

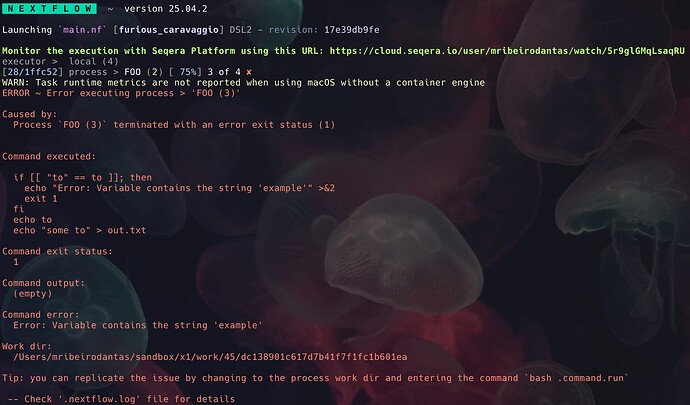

```groovy
process FOO {
    afterScript 'source move_logs.sh'

    input:
    val string

    output:
    tuple path('out.txt'), stdout

    script:
    """
    echo ${string}
    echo "some ${string}" > out.txt
    """
}

workflow {
    Channel.of('some', 'strings', 'to', 'test').set { my_ch }
    FOO(my_ch)
}
```

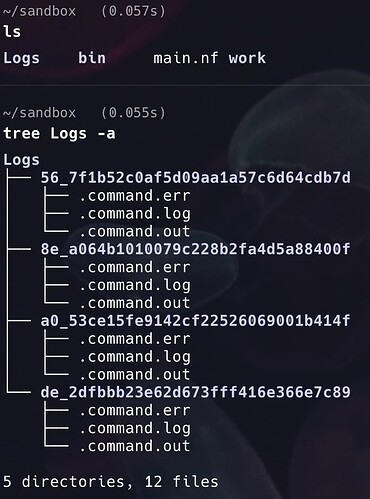

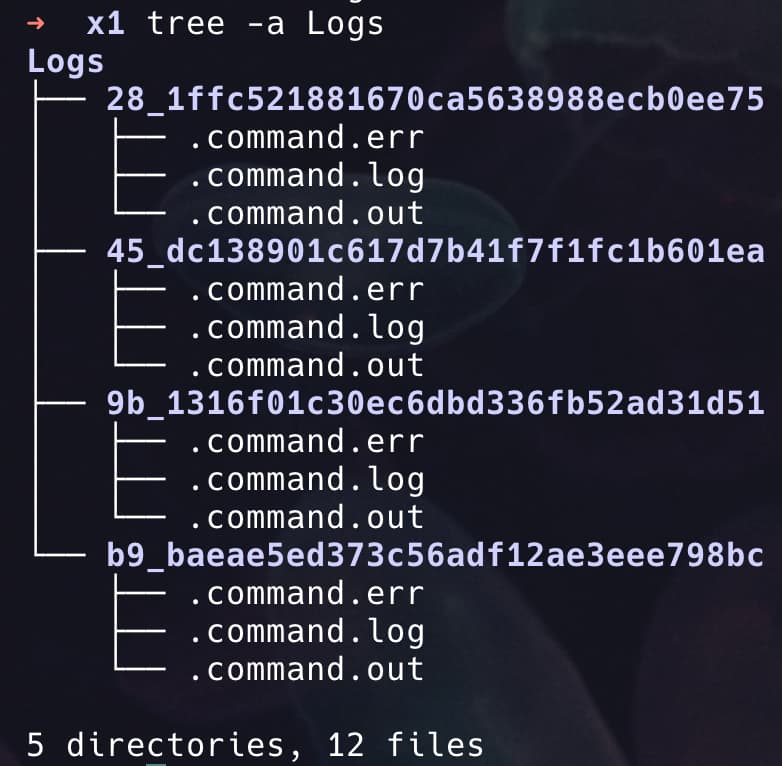

It simply receives a set of strings, prints each one to standard output and writes it, prefixed with "some", to a file named out.txt. There are four strings, so four tasks are run, each in its own task folder. The logs will be there, but I understand you may want to move them somewhere else, since the work directory is usually treated as a temporary folder for intermediate files. The `afterScript` process directive runs the move_logs.sh Bash script after each task finishes.
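Before copying anything, it can help to see what Nextflow already keeps in each task folder. Here is a minimal shell sketch, assuming the task directories follow the usual `<work>/<xx>/<hash>` layout and the work directory is the default `work` folder in the launch directory; `list_task_logs` is a hypothetical helper name, not part of Nextflow:

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the stdout/stderr/log captures of every task
# under a Nextflow work directory (defaults to ./work).
list_task_logs() {
    local work_dir="${1:-work}"
    # Task directories sit at <work_dir>/<2-char prefix>/<rest of hash>,
    # so the log files are exactly three levels below work_dir.
    find "$work_dir" -mindepth 3 -maxdepth 3 -type f \
        \( -name '.command.out' -o -name '.command.err' -o -name '.command.log' \) | sort
}

# Usage: list_task_logs work
```

For the four-string run above, you would expect one task folder per input string, each holding up to three of these files.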

You’ll find the move_logs.sh file content below:

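```shell
#!/usr/bin/env bash

# Name each task's log folder after its hash directory, e.g. work/ab/cdef... -> ab_cdef...
task_path=$(echo "$PWD" | awk -F/ '{print $(NF-1)"_"$NF}')

# Drop the last three path components (work/<xx>/<hash>) to get the folder that contains work/
run_folder=$(echo "$PWD" | rev | cut -d/ -f4- | rev)

mkdir -p "$run_folder/Logs/$task_path"

# Copy the three log files into this task's log folder
cp .command.err "$run_folder/Logs/$task_path"
cp .command.out "$run_folder/Logs/$task_path"
cp .command.log "$run_folder/Logs/$task_path"
```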

This script works out where you ran your project from and which folder belongs to each task. Strictly speaking, it strips the last three components (`work/<xx>/<hash>`) from the task path, so it finds the folder that contains the work directory; with the default setup that is the launch directory, but if you run your pipeline from one place and set a custom work directory somewhere else, you must adjust the code above. With these two pieces of information resolved, the script creates the folders (if they’re not already there) and copies the three log files you’re interested in (`.command.err`, `.command.out`, `.command.log`) into the Logs folder, each inside a subfolder named after its task.
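The same path arithmetic can be written with Bash parameter expansion, which makes the layout assumption explicit: the task directory is `<work_dir>/<xx>/<hash>` and, by default, `Logs` is created next to the work directory. The sketch below only computes the destination folder. `log_dest` and the `LOGS_ROOT` override (for a work directory that lives outside the launch directory) are hypothetical names, not part of Nextflow:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the destination folder for a task directory's logs.
# Assumes the task directory has the layout <work_dir>/<xx>/<hash>.
log_dest() {
    local task_dir="${1%/}"        # drop a trailing slash, if any
    local hash="${task_dir##*/}"   # last component, e.g. cdef123
    local parent="${task_dir%/*}"  # <work_dir>/<xx>
    local prefix="${parent##*/}"   # two-character prefix, e.g. ab
    local work_dir="${parent%/*}"  # <work_dir>
    # LOGS_ROOT (hypothetical) overrides the default: a Logs folder next to <work_dir>
    local root="${LOGS_ROOT:-${work_dir%/*}/Logs}"
    printf '%s/%s_%s\n' "$root" "$prefix" "$hash"
}

log_dest /home/user/project/work/ab/cdef123                 # /home/user/project/Logs/ab_cdef123
LOGS_ROOT=/data/logs log_dest /scratch/work/ab/cdef123      # /data/logs/ab_cdef123
```

Inside `move_logs.sh` you could then call `dest=$(log_dest "$PWD")`, run `mkdir -p "$dest"`, and copy the three `.command.*` files into `"$dest"`. Keeping the override in an environment variable means the script itself doesn't need editing when the work directory moves.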

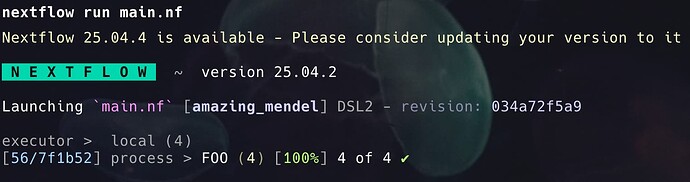

That’s what I get by running the pipeline above: